Hello, community!

We recently concluded the problem-solving, beginner-friendly, 2-day Learn With Google AI and Crowdsource ExploreML workshop on applied machine learning. We are sorry that we could not provide a recording of the workshops due to technical issues, which is why we are sharing a recap of our sessions and slides in this article.

If you attended the workshop, please endeavour to check your email inbox for additional details.

This recap is divided into two parts:

Day 2: Getting Started with Neural Networks and Understanding How Machine Learning Works. (This article.)

Disclaimer: All slides/images are credited to Google AI and Google Crowdsource facilitator resources. Any image that isn't will be credited to its rightful owner/author.

Table of Contents

Introduction to TensorFlow Playground.

i. Output Layer.

ii. Input Layer.

iii. Hidden Layer.

Introduction to Neural Networks

If you have read anything about machine learning, you may have come across the term "Neural Network". Neural networks are a popular choice for machine learning models because of their ability to handle complex data and make accurate predictions where simpler models (such as linear and logistic regression, kNNs, and even boosting algorithms) might fall short.

Kindly watch the video below to get a sense of the problems neural network algorithms are solving for Google.

YouTube Video: How Does Your Phone Know This Is A Dog?

What is a neural network?

In short, it’s a mathematical function that maps a given input to the desired output.

Image adapted from becominghuman.ai by Venkatesh Tata.

In the introductory module, you learned about a couple of types of machine learning models, such as classification and regression. Simple models work fine with simple data, but as soon as you want to interpret complex data like images, audio, or even numerical data with lots of variables (e.g. housing prices, which depend on location, number of bedrooms, size, and so on), you need something capable of dealing with that complexity.

In reality, a neural network is a big, complex mathematical function that looks nothing like what you see here, but the diagram may help you see what's going on.
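To make "a mathematical function that maps an input to an output" concrete, here is a minimal Python sketch of a tiny network with two inputs, three hidden neurons, and one output. The weights, biases, and layer sizes are hypothetical values picked by hand for illustration; a real network learns them from data, and frameworks like TensorFlow do this far more efficiently.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    """Pass positive values through unchanged and output 0 otherwise."""
    return max(0.0, z)


def dense(inputs, weights, biases, activation):
    """One fully connected layer: every neuron takes a weighted sum of all the
    inputs, adds its own bias, and applies the activation function."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def tiny_network(x):
    """Hypothetical 2-input -> 3-hidden -> 1-output network with hand-picked weights."""
    hidden = dense(x,
                   weights=[[0.5, -0.2], [0.3, 0.8], [-0.7, 0.1]],
                   biases=[0.1, 0.0, 0.2],
                   activation=relu)
    output = dense(hidden, weights=[[1.0, -1.5, 0.5]], biases=[0.0],
                   activation=sigmoid)
    return output[0]  # a single number between 0 and 1


print(tiny_network([1.0, 2.0]))
```

Every layer is just a function of the previous layer's output, so the whole network is one big function built by nesting small ones.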

Like any other machine learning system, a neural network is trained by feeding data into the model, which attempts to make a prediction. At first, the predictions will be essentially garbage, but feedback on how wrong they are is used to improve the model as training continues.

Once the prediction quality is sufficient, the model is tested with new data and it makes a prediction based on what it learned from the training data.
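The train-then-test cycle can be sketched with the smallest possible model: a single neuron that computes w * x + b, trained by gradient descent (one common way of turning feedback into improvements). The dataset, learning rate, and number of training passes below are made-up assumptions for illustration.

```python
import random


def make_data(n, rng):
    """Hypothetical dataset: y = 2x + 1 plus a little random noise."""
    data = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        data.append((x, 2.0 * x + 1.0 + rng.gauss(0.0, 0.05)))
    return data


def mse(w, b, data):
    """Mean squared error: the average squared gap between prediction and truth."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(data, learning_rate=0.1, epochs=200):
    """Fit a single linear neuron (w * x + b) by gradient descent on the MSE."""
    w, b = 0.0, 0.0  # the starting guess is essentially garbage
    n = len(data)
    for _ in range(epochs):
        # Feedback: the gradients say which direction reduces the error.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


rng = random.Random(0)
train_set = make_data(100, rng)
test_set = make_data(20, rng)  # new data the model never sees during training

w, b = train(train_set)
print(f"learned w={w:.2f}, b={b:.2f}")
print(f"training loss={mse(w, b, train_set):.4f}, test loss={mse(w, b, test_set):.4f}")
```

The learned w and b should end up close to 2 and 1, and the test loss, measured on points the model never trained on, shows whether what it learned carries over to new data.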

What this diagram doesn't show is how each neuron is able to learn and improve based on feedback. Let's look more deeply at how a neuron is able to make a decision.

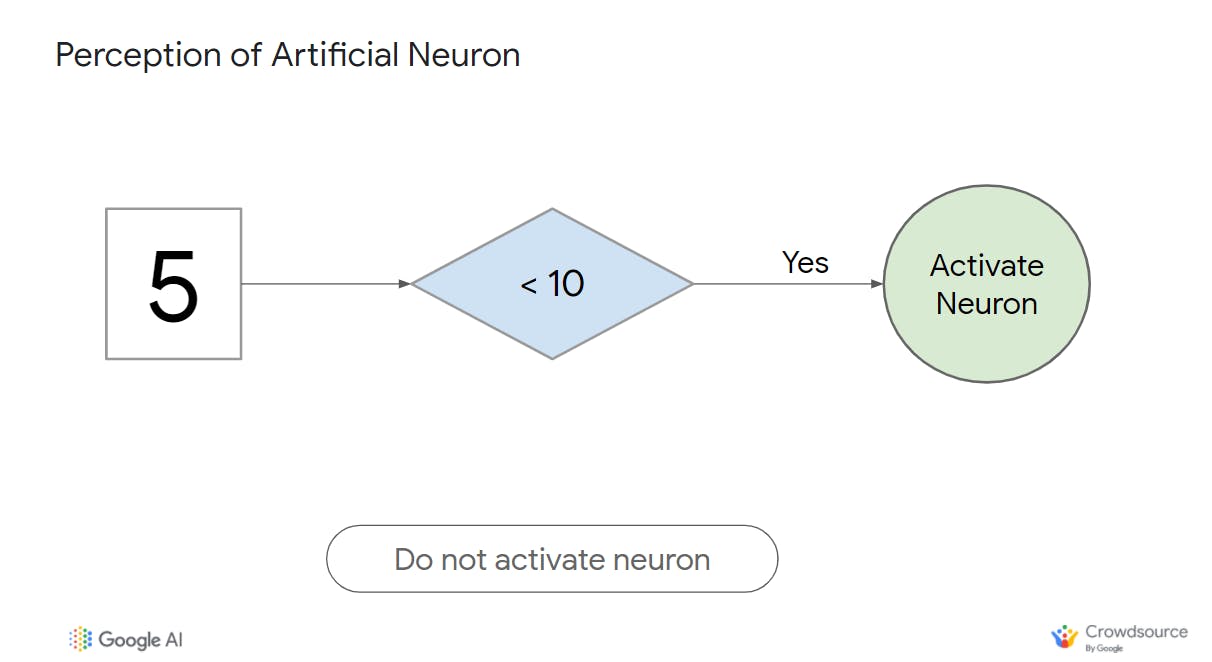

Activation Functions

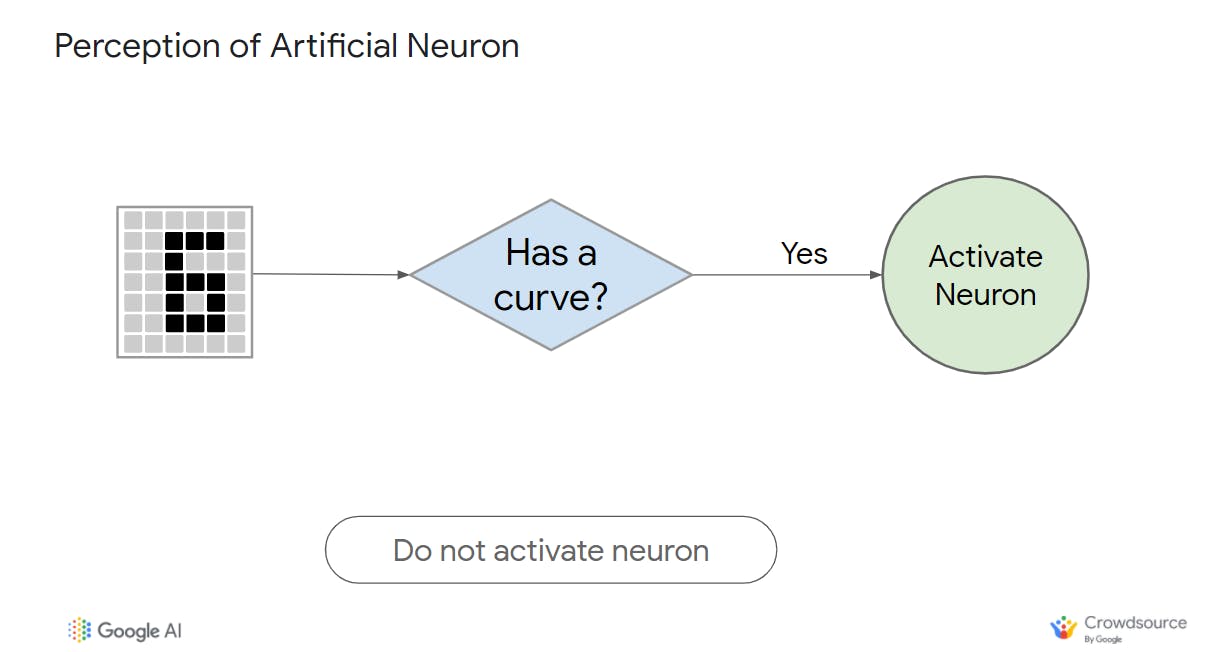

In the diagram above, we are applying an activation function to a neuron (the basic unit of a neural network). This is the mathematical way of setting the threshold or condition for activating a neuron. It is similar to an if...then statement in programming, e.g. if time > 11 am, eat lunch.

The neuron checks whether the input value meets the activation function's requirements. If it does not, the neuron does nothing and passes no value on to any connected neurons in the network. If it does, the neuron does something to the data and passes the result as an input to the next neuron.
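Two activation functions make the "pass nothing or pass something" idea concrete. This is a minimal sketch: the step function mirrors the if...then threshold, while ReLU (rectified linear unit, a widely used activation) passes nothing for inputs at or below zero and passes the value itself above it.

```python
def step(z, threshold=0.0):
    """Step activation: fire (1.0) if the input clears the threshold, else stay silent (0.0)."""
    return 1.0 if z > threshold else 0.0


def relu(z):
    """ReLU activation: pass nothing (0.0) at or below zero, pass the value itself otherwise."""
    return max(0.0, z)


for value in (-3.0, 0.5, 4.0):
    print(f"input={value}: step={step(value)}, relu={relu(value)}")
# Expected: -3.0 -> (0.0, 0.0), 0.5 -> (1.0, 0.5), 4.0 -> (1.0, 4.0)
```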

As the network trains, the connections that help it make good predictions are given stronger "weights", which just means those neurons have more influence on the output and are learning more about specific features of the data than other neurons.

This operation is carried out across all of the nodes in the network, allowing very complex patterns to be learned and predictions to be made.

Let's look at a couple of examples...

Here:

The number 5 is the input to this neuron.

The neuron's activation function has learned to check if a number is < 10.

This input passes the test and so the neuron is activated.

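A single neuron like this is one of the simplest forms of a neural network. Without a threshold (that is, with a linear activation), a single neuron computes weight * input + bias, which is effectively the same as linear regression, or fitting a line to data.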
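Here is a sketch of the "< 10" neuron from the example above, under the assumption that it uses a step activation. A neuron multiplies its input by a weight, adds a bias, and applies its activation; the weight and bias values below are hypothetical, hand-picked so that the neuron fires exactly when x < 10. Swapping in an identity (do-nothing) activation turns the same neuron into a straight line.

```python
def neuron(x, weight, bias, activation):
    """A one-input neuron: weight the input, add the bias, apply the activation."""
    return activation(weight * x + bias)


def step(z):
    """Fire (1) for positive input, stay silent (0) otherwise."""
    return 1 if z > 0 else 0


def identity(z):
    """A do-nothing activation: the neuron's output is just weight * x + bias."""
    return z


def is_less_than_10(x):
    # Hypothetical hand-picked values: -1 * x + 10 > 0 exactly when x < 10.
    return neuron(x, weight=-1.0, bias=10.0, activation=step)


def line(x):
    # Hypothetical slope m = 2 and intercept c = 1: the straight line y = 2x + 1.
    return neuron(x, weight=2.0, bias=1.0, activation=identity)


print(is_less_than_10(5))   # 1: the input passes the test, so the neuron is activated
print(is_less_than_10(12))  # 0: the input fails the test, so the neuron stays silent
print(line(3))              # 7.0
```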

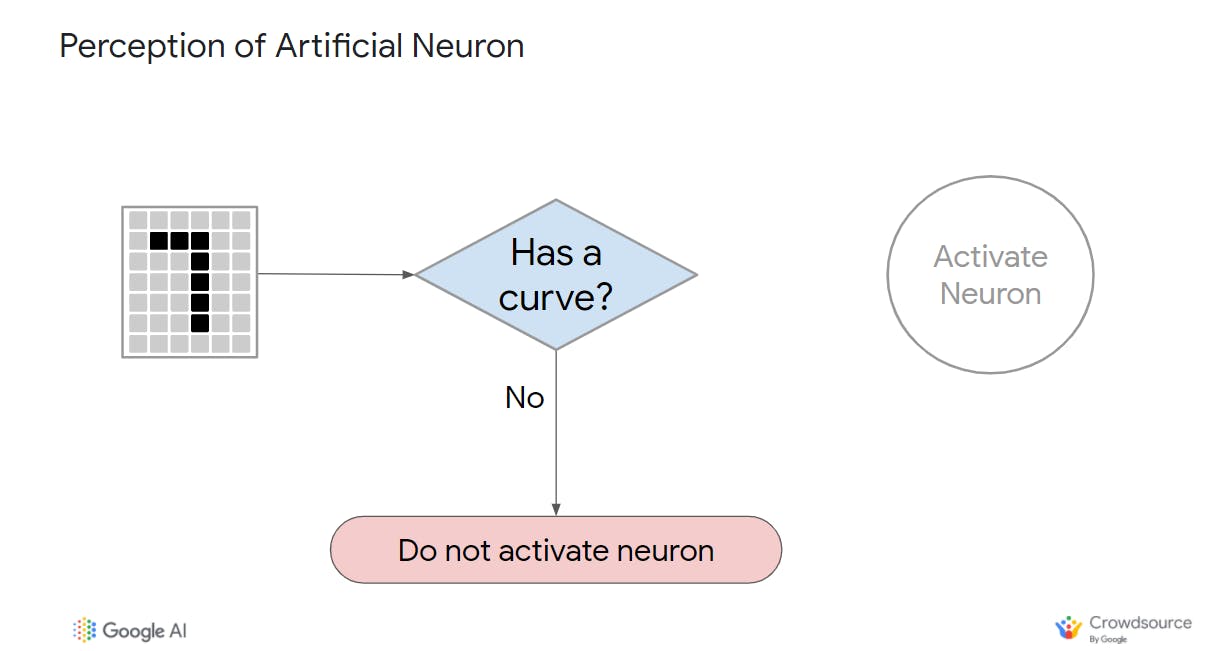

Let's see another example:

The input to this neuron is an image of the number 7.

The neuron's activation function has learned to check if an image like this has a curve.

This input does not have a curve, so it fails the test and the neuron is not activated.

Let's round up with a final example:

This time, the input to this neuron is an image of the number 6.

This input does have a curve, so it passes the test, the neuron is activated, and the information is passed to the next neuron.

Simple functions like these can combine to make more complex decisions. For instance, this neuron's ability to determine whether the input has a curve depends on other neurons checking whether a pixel is black or not, or on groups of pixels combining to detect an eyebrow in the input image.
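As a small illustration of simple neurons combining into a more complex decision, the sketch below wires three step-activation neurons together to compute "exactly one of two inputs is on" (the XOR function), something no single neuron of this kind can do on its own. The weights are hand-picked for illustration rather than learned.

```python
def step(z):
    """Fire (1) for positive input, stay silent (0) otherwise."""
    return 1 if z > 0 else 0


def threshold_neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through a step activation."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def xor_network(x1, x2):
    either = threshold_neuron([x1, x2], [1, 1], -0.5)  # fires if at least one input is 1
    both = threshold_neuron([x1, x2], [1, 1], -1.5)    # fires only if both inputs are 1
    # The output neuron combines the two simpler decisions: "either, but not both".
    return threshold_neuron([either, both], [1, -1], -0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))  # prints 1 only for (0, 1) and (1, 0)
```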

If you are interested in learning more about activation functions, you can learn about the various neural network activation functions here.

What A Neural Network Looks Like

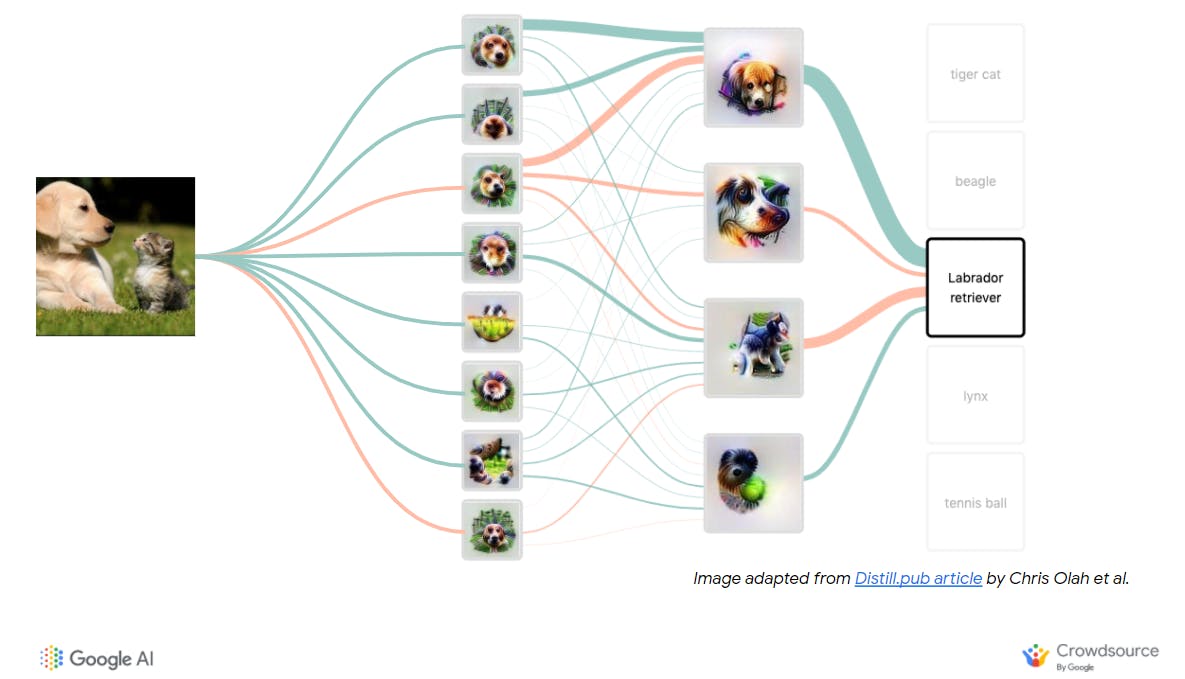

Image adapted from Distill Pub - Building Blocks of Interpretability by Chris Olah et al.

A neural network can be made up of many hidden layers. These are the intermediate steps to get from the input to the output. For example, a neural network may have layers that detect certain colours and shapes, and other layers that differentiate between certain types of animals and breeds.

It's important to clarify that the neural network does not know what a dog or a cat is; it's just learning to do what we want it to do based on feedback and the patterns it detects.

But how does a neural network learn from feedback? Neural networks were loosely modelled after the biological brain. The connections between neurons can be strengthened or weakened using weights and biases, much as a dog that receives a treat and a pat on the head for retrieving a ball is more likely to do it again.

Weights and biases allow a neural network to learn what pathways "work" for a certain task and bias the network to use those pathways again. This makes the network more effective and efficient at making predictions.

Neural networks carry out these complex calculations far more quickly and more often than a human ever could. Achieving high-quality results requires a lot of computing power, which is why neural networks were once thought infeasible; today, data centres (the infrastructure behind cloud computing) with far greater processing power make neural networks a reality.

Introduction to TensorFlow Playground

You can work with machine learning tech in two different ways:

Machine learning is typically implemented using programming languages like Python and R, though speciality libraries and platforms like Scikit-learn, Theano, and TensorFlow exist to make it even easier.

For those who do not yet write code, or don't want to write code for machine learning models, there are APIs (application programming interfaces) that make machine learning an option for you or your application. Examples include Google's Cloud Vision API and Google Cloud AutoML.

In this session, we are going to use TensorFlow Playground, a tool for exploring and learning about machine learning without the need to write code.

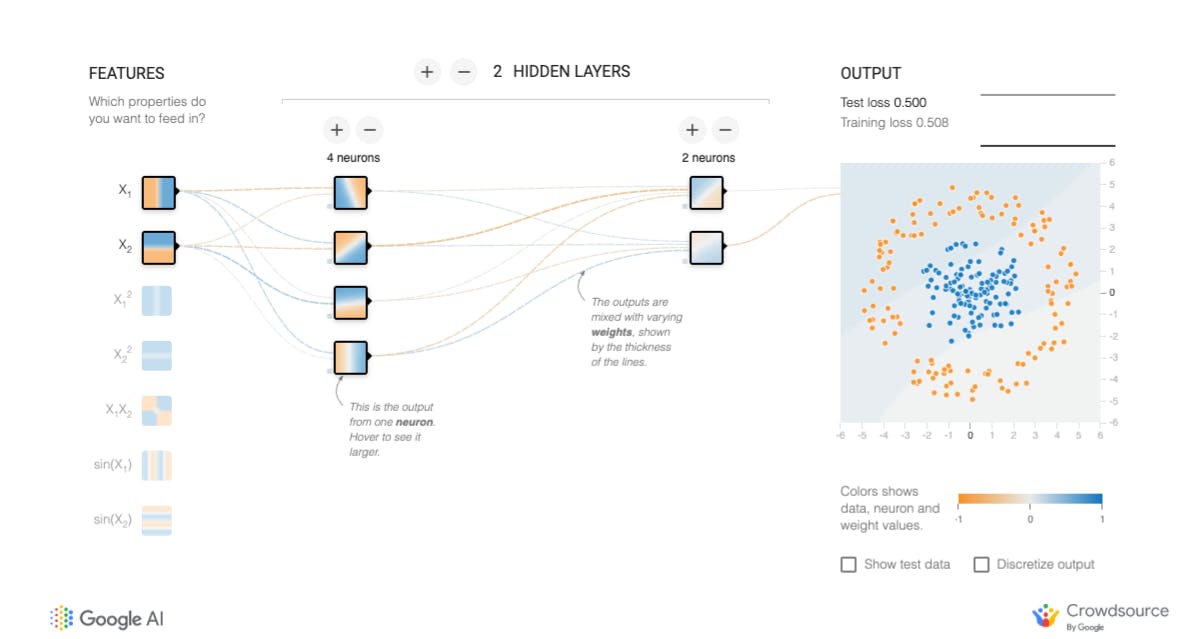

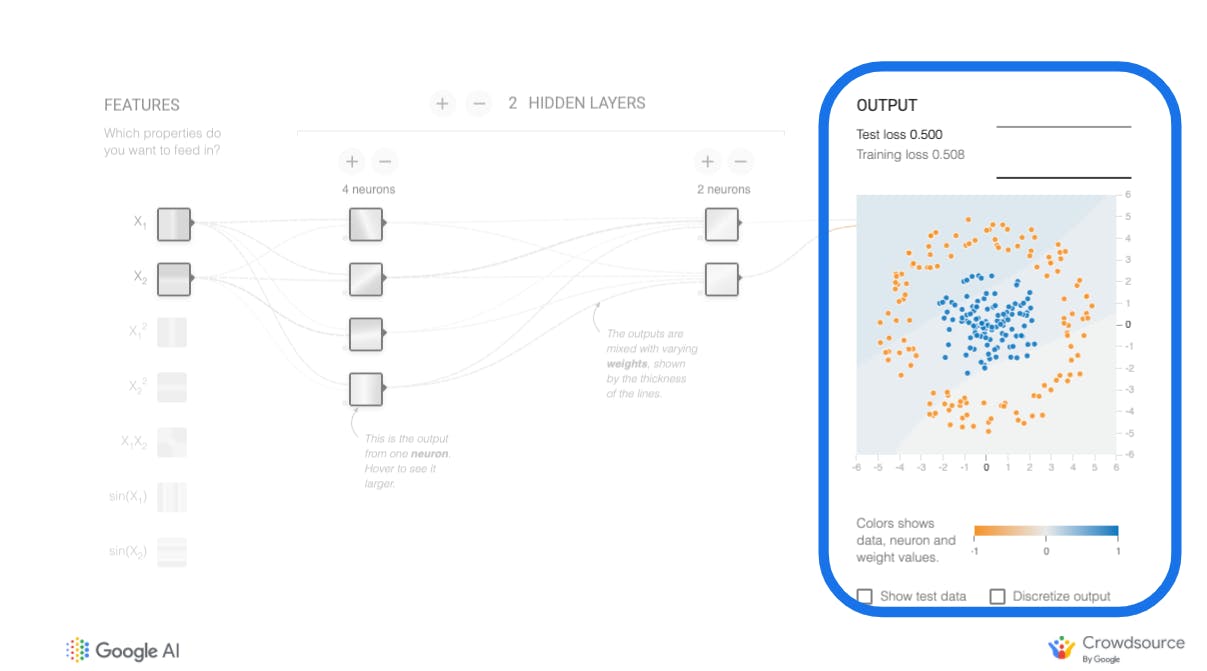

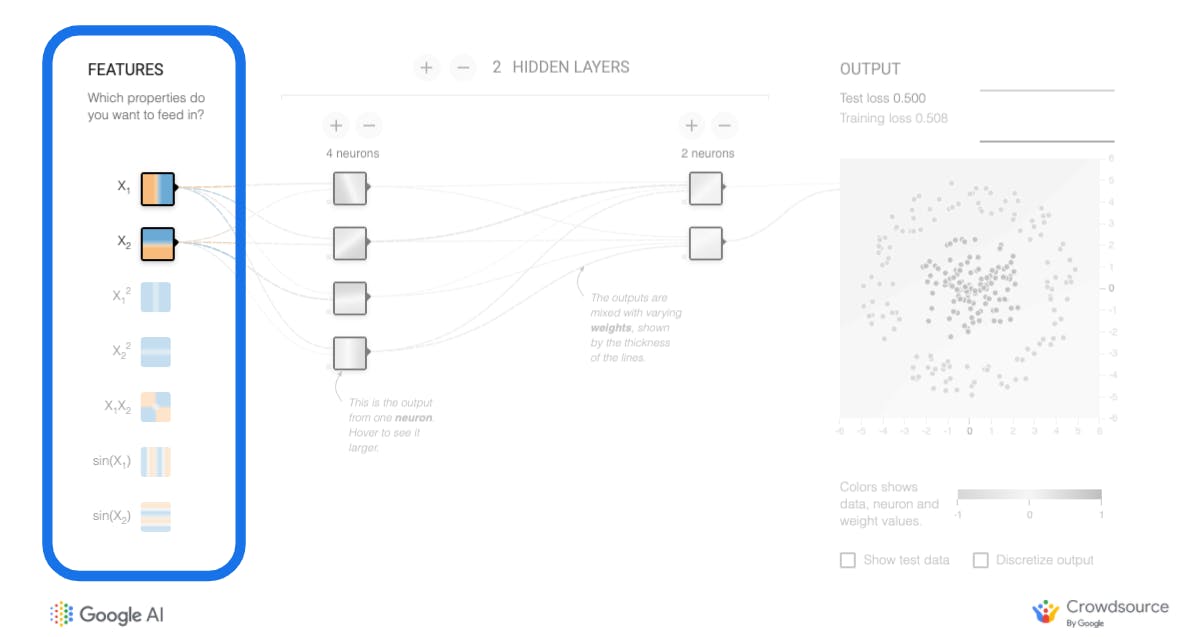

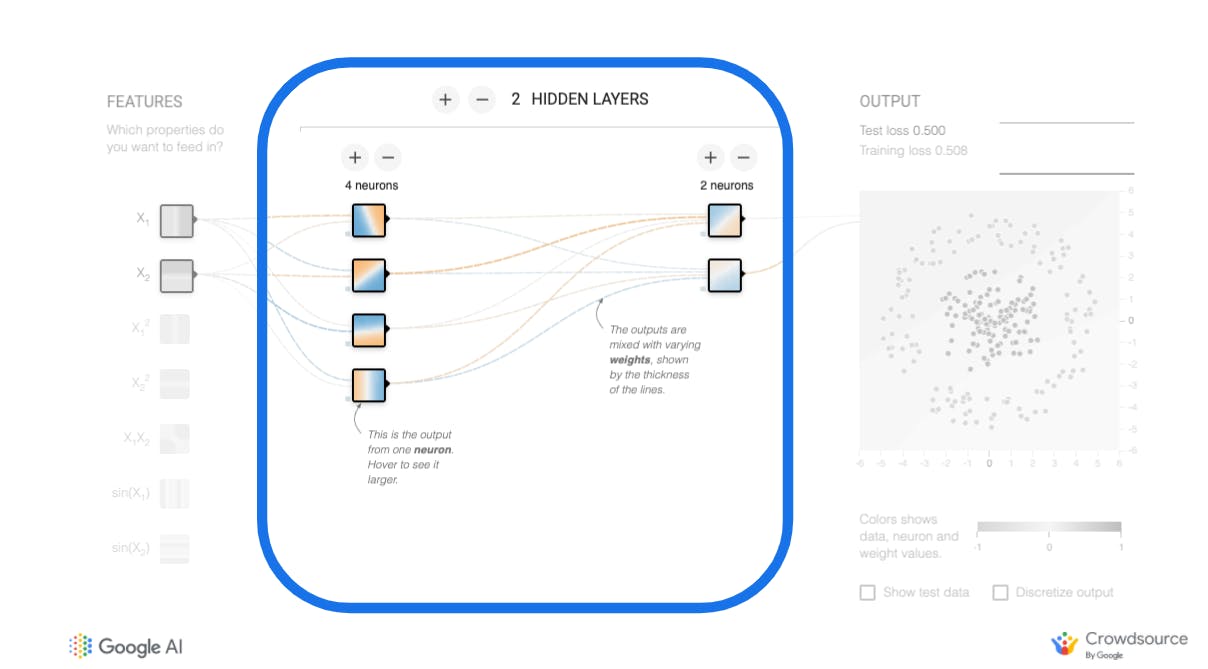

As you can see above, the playground contains the basic components of a neural network: the input layer (the features; your independent variables X), the hidden layer (stacked neurons that learn from the features of the data), and the output layer (your dependent variable y).

Output Layer

Let's start by taking a broad look at what goes on in the output layer. While your goal with machine learning may be something more interesting, like "which of these x-rays contain evidence of cancer?", we'll start simple. Your goal in the machine learning system below is to separate the blue and the orange dots.

Could you do this with only a line? My guess is no. What would you need in order to successfully separate the dots? You will most likely need a circle, which can be made by combining a bunch of lines and functions.

Neural networks can be built to solve complex nonlinear problems: problems that cannot be solved with a straight line (a linear boundary), like the problem above.

While you can see the output on the 2-D graph, the effectiveness of the model is measured with loss: how well (or badly) the model is doing at correctly drawing a circle to distinguish between the blue dots and the orange dots. Loss measures the difference between your model's predictions and the actual labels in the data. In general, the lower the loss, the better your model.
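To see why a single line is not enough for circle-shaped data, this sketch generates hypothetical points in the spirit of the Playground's circle dataset, brute-forces a grid of straight lines, and compares the best of them with a circular boundary. The data generator, the grid, and the radius of 1.5 are assumptions; a grid search is an illustration, not a proof that no line could work.

```python
import math
import random


def make_circle_data(n, rng):
    """Hypothetical data: blue points within radius 1 of the centre,
    orange points on a ring between radius 2 and 3."""
    points = []
    for i in range(n):
        label = "blue" if i % 2 == 0 else "orange"
        radius = rng.uniform(0.0, 1.0) if label == "blue" else rng.uniform(2.0, 3.0)
        angle = rng.uniform(0.0, 2.0 * math.pi)
        points.append((radius * math.cos(angle), radius * math.sin(angle), label))
    return points


def accuracy(classify, points):
    """Fraction of points whose predicted label matches the true label."""
    return sum(classify(x1, x2) == label for x1, x2, label in points) / len(points)


def best_line_accuracy(points):
    """Brute-force a grid of straight lines (direction x offset) and keep the best score."""
    best = 0.0
    for degrees in range(0, 360, 5):
        c = math.cos(math.radians(degrees))
        s = math.sin(math.radians(degrees))
        for k in range(-30, 31):
            offset = k / 10.0

            def side_of_line(x1, x2):
                # Points on one side of the line are called blue, the rest orange.
                return "blue" if c * x1 + s * x2 < offset else "orange"

            best = max(best, accuracy(side_of_line, points))
    return best


def inside_circle(x1, x2):
    # A circular boundary: blue if x1^2 + x2^2 < 1.5^2.
    return "blue" if x1 ** 2 + x2 ** 2 < 1.5 ** 2 else "orange"


data = make_circle_data(200, random.Random(42))
line_score = best_line_accuracy(data)
circle_score = accuracy(inside_circle, data)
print(f"best straight line: {line_score:.2f}, circle: {circle_score:.2f}")
```

On data like this, even the best line on the grid misclassifies a large share of points, while the circle separates them all.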
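The exact loss TensorFlow Playground reports is not covered here, but one common loss for a two-class problem like blue vs orange is log loss (binary cross-entropy). This minimal sketch scores two hypothetical models on the same four points: the one whose predicted probabilities match the labels gets the lower loss.

```python
import math


def log_loss(labels, predicted_probs, eps=1e-12):
    """Binary cross-entropy. labels are 1 (blue) or 0 (orange);
    predicted_probs are the model's probability that each point is blue."""
    total = 0.0
    for y, p in zip(labels, predicted_probs):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)


labels = [1, 1, 0, 0]                 # two blue dots, then two orange dots
good_model = [0.9, 0.8, 0.1, 0.2]     # confident and mostly right
bad_model = [0.3, 0.4, 0.7, 0.6]      # leaning the wrong way every time

good_loss = log_loss(labels, good_model)
bad_loss = log_loss(labels, bad_model)
print(f"good model loss: {good_loss:.3f}, bad model loss: {bad_loss:.3f}")
```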

Input Layer

On your left, you'll see the features from your dataset. Features are the information drawn from your data examples that distinguishes one example from another. Think back to your secondary (or high) school days: do you remember the equation of a straight line, y = m*x + c? If so, think of the features of your dataset as the independent variables (your x's) and the output as the dependent variable (your y).

In TensorFlow Playground, the features are simply mathematical functions, like vertical and horizontal lines, and their combinations (sine, cosine, and so on).

Real-world examples of features from a dataset, and the objective they are trying to reach, might include:
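A small sketch of how a single point on the Playground's grid could be turned into features. The feature names assume the inputs the Playground displays (X1, X2, their squares, their product, and their sines); the weighted sum is the many-feature version of y = m*x + c. The weights in the usage example are hypothetical, chosen so that a negative output means "inside a circle of radius 1.5".

```python
import math


def playground_features(x1, x2):
    """Turn one 2-D point into named features. The names mirror the inputs
    TensorFlow Playground shows (our assumption), not an official API."""
    return {
        "X1": x1,               # varies left to right (a vertical-line boundary)
        "X2": x2,               # varies bottom to top (a horizontal-line boundary)
        "X1^2": x1 * x1,
        "X2^2": x2 * x2,
        "X1*X2": x1 * x2,
        "sin(X1)": math.sin(x1),
        "sin(X2)": math.sin(x2),
    }


def weighted_sum(features, weights, bias):
    """y = m1*x1 + m2*x2 + ... + c: the many-feature version of y = m*x + c.
    Features without a weight are ignored (treated as weight 0)."""
    return sum(weights.get(name, 0.0) * value for name, value in features.items()) + bias


# Hypothetical weights: the output is negative inside a circle of radius 1.5.
circle_weights = {"X1^2": 1.0, "X2^2": 1.0}
print(weighted_sum(playground_features(0.5, 0.5), circle_weights, bias=-2.25))   # -1.75: inside
print(weighted_sum(playground_features(2.0, -1.0), circle_weights, bias=-2.25))  # 2.75: outside
```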

Objective: Classifying Dogs vs Cats.

Data: Thousands to millions of images of both cats and dogs (see here).

Features:

- Size of the dog or cat.

- Colour of the dog or cat.

- Length of the tail.

- Absence or presence of whiskers.

- Absence or presence of a paw.

Objective: Classifying Numbers between 0 and 9

Data: Thousands of images of handwritten digits (see here).

Features:

- Number of curves.

- Number of lines.

Objective: Recommending videos to users.

Data: Learn more about YouTube recommendation here. If you want to go more technical, you can read the paper here.

Features:

- Video topic.

- Number of views.

- Creator of the video.

- Videos the user has watched.

- Videos the user searched for in the past (what are called "search tokens").

- The user's geographic location, language, age, and perhaps gender.

- How much time the user spent on previous videos.

Objective: Predicting the Price of a House.

Data: Thousands of rows of historical data containing houses with various attributes and their prices (see here).

Features:

- Location of the house.

- Number of bedrooms.

- Size of the property.

- Whether the house has an attached or detached garage.

- And so on.

Objective: Predicting which Ad to Display to a user.

Data: Thousands to millions of records of ads previously served to users and whether or not they clicked on them (see here).

Features:

- The user's query.

- Type of device.

- Time of day.

- Location of the user.

- And so on.
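Most models only accept numbers, so features like those above have to be encoded before training. This sketch assumes a hypothetical house record with a handful of the features listed for house prices; the location categories are made up, and location is one-hot encoded (one slot per category).

```python
from dataclasses import dataclass

LOCATIONS = ["downtown", "suburb", "rural"]  # hypothetical set of location categories


@dataclass
class House:
    location: str
    bedrooms: int
    size_sq_m: float
    attached_garage: bool


def encode(house):
    """Turn one house into a list of numbers a model can consume.
    Location is one-hot encoded: 1.0 in the matching category's slot, 0.0 elsewhere."""
    one_hot = [1.0 if house.location == loc else 0.0 for loc in LOCATIONS]
    garage = 1.0 if house.attached_garage else 0.0
    return one_hot + [float(house.bedrooms), house.size_sq_m, garage]


print(encode(House("suburb", 3, 120.0, True)))
# [0.0, 1.0, 0.0, 3.0, 120.0, 1.0]
```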

Hidden Layer

This layer is where the complex patterns in the data are discovered by stacked neurons that form the "deep" part of deep learning. During training, combinations of the input features are tested on how well they separate the data. The outcome is graded, and this grade is known as the loss. The loss is then used as feedback to improve the model.

Although there is no consensus among experts on how deep a network must be to be considered "deep", I'd say the higher the number of hidden layers (typically two and above), the "deeper" the network, hence the term "deep learning".

There are some rules of thumb for crafting neural networks, but I likely couldn't tell you the right answer in advance; a high-quality neural network takes experimentation and iteration. Deep learning is part science and part art, so you'll need to be creative with your experiments.

TensorFlow Playground Exercises

If you want to play with TensorFlow Playground and learn how neural networks work under the hood, these exercises will help you.

You will carry out the tasks as instructed in the pages here and answer the resulting questions before scrolling down to check the correct answers.

Exercise 1: First Neural Network. Please click here to access it.

Exercise 2: Neural Network Initialization.

One important point you may have noticed from exercise 1 is this: with traditional programming methods, you will most likely get the results you expect, because the outcome is simpler, logical, and typically deterministic. Consistent and expected results cannot be guaranteed with machine learning, because it is empirical, many ML algorithms have some form of randomization built in (for example, random starting weights), and your input data may change often.
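The randomization mentioned above often starts with the initial weights. In this minimal sketch, init_weights is a hypothetical helper that draws starting weights from a uniform range; a different seed gives a different starting point (and therefore possibly a different trained model), while reusing a seed makes a run repeatable.

```python
import random


def init_weights(n, seed=None):
    """Hypothetical helper: draw n starting weights uniformly from [-1, 1].
    With seed=None the starting point is different on every run."""
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(n)]


run_a = init_weights(3, seed=1)
run_b = init_weights(3, seed=2)
print(run_a)
print(run_b)                             # a different starting point for training
print(run_a == init_weights(3, seed=1))  # True: the same seed reproduces the same start
```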

Exercise 3: Neural Network Spiral.

Limitations of Neural Networks

To understand the limitations of neural networks, you are going to train your very own neural network model without writing a single line of code, all from your web browser (or mobile phone browser).

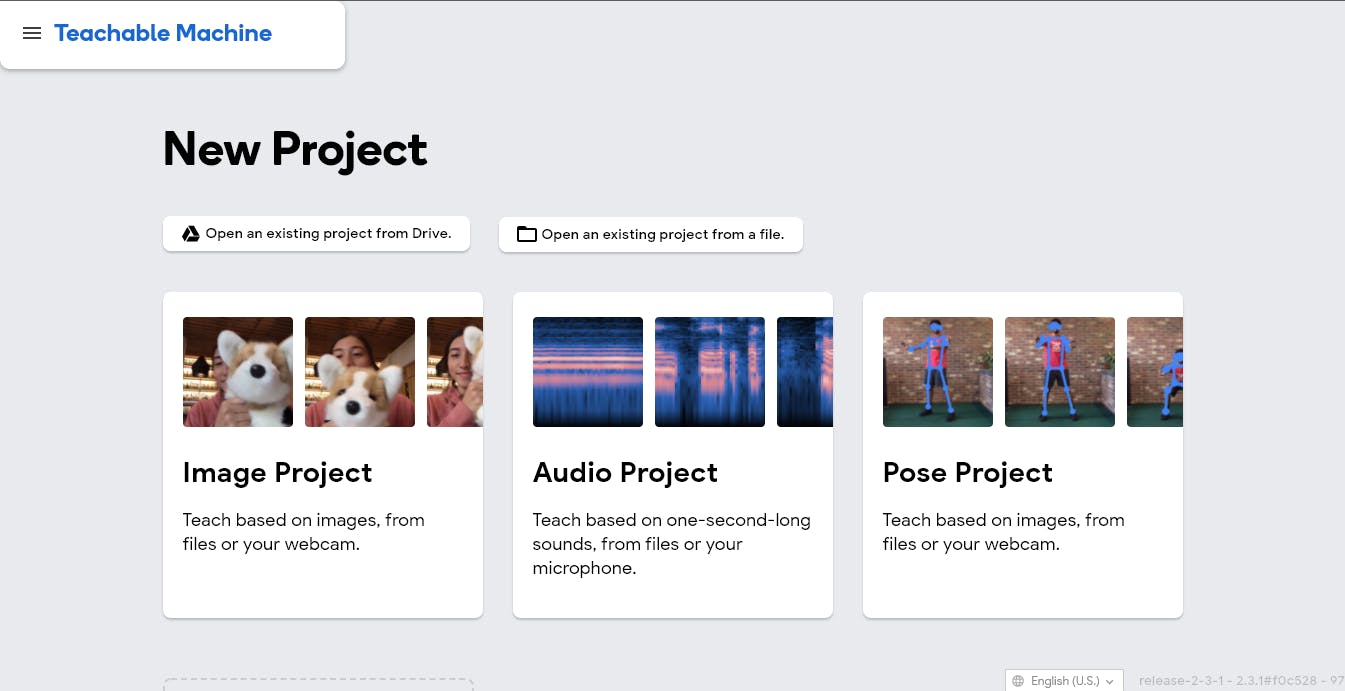

In this experiment, we are going to use Teachable Machine, another online tool for machine learning. Teachable Machine is powered by MobileNet, a neural network optimized for size so it can run on your mobile phone.

See how it works below:

Based on the video you just watched above and with your knowledge of how machine learning works and its limitations, can you come up with a way to confuse the machine learning system?

Recall that you can confuse an ML system in many ways, from not defining the objective properly to collecting a dataset that does not represent the problem you want to solve.

For example, you might want to train the system to distinguish between different colours of clothes in your store. If you only train the system on red, green, and black clothes, you may confuse it by asking it to predict the colour of a blue cloth, a colour it has never seen. This is essentially what we have been discussing: garbage in, garbage out!
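The clothes example can be sketched with a deliberately simple classifier. This is not how Teachable Machine works (it uses a neural network); it is a 1-nearest-neighbour rule on hypothetical RGB values, used only to show that a model can only answer with labels it was trained on.

```python
def nearest_colour(rgb, training):
    """Predict the label of the closest training example (1-nearest neighbour).
    Whatever it sees, it can only answer with a label from its training data."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(training, key=lambda item: squared_distance(item[0], rgb))[1]


# Hypothetical training set: only red, green, and black clothes.
training = [
    ((200, 30, 30), "red"),
    ((30, 180, 40), "green"),
    ((20, 20, 20), "black"),
]

print(nearest_colour((210, 40, 35), training))  # red
print(nearest_colour((30, 60, 220), training))  # black: a blue shirt forced into a known label
```

The blue shirt gets labelled "black" simply because black is the closest thing the model has seen.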

Exercise: Take out 10-15 minutes of your time to build your own machine learning model without writing a single line of code.

For example, you could train the model to distinguish between a high five and a knuckle, then show it your first two fingers to see whether it recognizes them.

Click here and return to this tab when you are done.

You will notice that there are three (3) projects you can take on; image project, audio project and pose project.

Click Image Project.

Learn how to gather your image data here.

Learn how to train your model here.

Learn how to export and make use of your model here.

Discussion points:

What did you try that worked or didn't work?

Can you think of an application where this could be problematic?

How would you try to address that issue?

Review and Next Steps

You have now come to the end of our summary of day 2 of the workshop. In this article, you learnt:

What a neural network is: A machine learning algorithm that (sort of) mimics the function of the brain by using mathematical functions to recognize patterns in data.

Neural networks are useful when you have a significantly complex problem to solve (one above the "pay grade" of simpler ML models like kNN) and a large amount (or corpus) of data.

Neural networks are "experts" at detecting and recognizing patterns in large amounts of data, something that simpler machine learning models might not be able to do.

We learnt that one serious limitation of neural networks is, in fact, the flip side of their biggest strength: they are only as good as the input data you feed into them. Feed in data of high quality (a good representation of the problem) and in large quantity.

If you attended the workshop, we do hope you had a great time and learnt a lot to help you on your journey in understanding or applying machine learning. (Check your email for other workshop-related information, including details on how you'll pitch your group presentation.)

If you didn't, we hope you learned a lot from this article.

Next steps? We urge you to join our AI and data learning community by clicking here.

Interested in another virtual event from our community? We have just the right event to round up a crazy year.

Register using: bit.ly/cr-ads-20

You can share this opportunity with others as well by tweeting or sharing the message below on your social media feed.

2020 has been a wild year for most data scientists! Join us @PHCSchoolOfAI as we end the year with a career recess virtual event, taking the much-needed break from work to interact with other data scientists and learn from the practical experiences of others: bit.ly/cr-ads-20

Did you enjoy the article? Did you find it useful? Send us a comment below and react to it so others can easily find the article as well.

Or...

Did we miss anything? Do you have any feedback? Did you spot any errors? Are you facing any challenges with the links anchored or other details? Please let us know in the comments below.